Self-hosting done right with Ansible & Docker

Learning infrastructure automation the hard way - from multi-node cluster-f*ck to a production-ready, single-node setup with Ansible.

Like most developers, I’ve spent years using cloud services. AWS, DigitalOcean, Vercel—they’re convenient, predictable, and someone else’s problem when things break.

But there’s an undeniable appeal to owning your infrastructure. Full control, no vendor lock-in, and the satisfaction of building something from the ground up.

So, naturally, I decided to build my own self-hosted infrastructure. How hard could it be?

Spoiler alert: Very f*cking hard.

TL;DR: the complete, up-to-date configuration for my entire setup is available in this GitHub repo. I’ll be updating it continuously as I learn and discover new things about self-hosting.

Some Context

This wasn’t my first attempt at self-hosting. I’ve deployed Coolify on DigitalOcean for quick app deployments and set up Mailcow on Hetzner for email campaigns. I was already running most of the services I’ll mention in my local Docker setup.

But Docker on Windows/WSL is a pain. The networking quirks, file system performance issues, and random crashes pushed me to find a more robust solution.

The dream was simple: reliable, automated infrastructure that I could scale and maintain with ease.

Mistake #1: Over-Engineering with Kubernetes and Docker Swarm

Like any developer who’s read too many articles about microservices, I started with what seemed like the “right” solution: K3s, a lightweight Kubernetes distribution.

K3s cluster architecture - Source: https://k3s.io/img/how-it-works-k3s-revised.svg

The installation was deceptively simple:

    curl -sfL https://get.k3s.io | sh -

Within minutes, I had a single-node cluster. But when things went wrong, I was lost. With zero kubectl experience, debugging was a nightmare of get pods, describe pod, and logs. The vast Kubernetes ecosystem assumes a foundational knowledge I simply didn’t have. I was drowning in complexity before I even deployed a single application.

So, I pivoted to what I thought would be simpler: a multi-node Docker Swarm cluster. High availability! Load balancing! Scalability! All the buzzwords that get infrastructure engineers excited.

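    # My second ambitious (and flawed) setup
    # On the first manager node:
    docker swarm init --advertise-addr 192.168.1.100
    # On each additional node, using the token printed by the init command:
    docker swarm join --token SWMTKN-xxx 192.168.1.100:2377

I had grand plans for Swarm, too: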

- 3 manager nodes for high availability

- Multiple worker nodes for redundancy

- Traefik for load balancing

- Shared storage with NFS or GlusterFS

What I got was another lesson in complexity. High availability is great in theory, but a nightmare to implement for stateful services like databases without a cloud provider’s help. Multi-node networking is notoriously unreliable, and don’t even get me started on storage.

Key Realization #1: Powerful tools don’t help if you don’t understand the fundamentals. Most self-hosted setups don’t need the complexity of Kubernetes or Swarm. A single, powerful node is often more than enough.

The Great Simplification

After weeks of battling my over-engineered setups, I embraced simplicity.

While horizontal scaling is the cloud standard, you can’t beat the simplicity of vertical scaling for self-hosting. Most providers now offer one-click scaling with minimal downtime.

My new philosophy:

- Single-node deployment: One powerful server is easier to manage.

- Essential services only: No experimental features.

- Docker Compose: Simple, declarative service management.

- UFW & Tailscale: Basic firewalling and secure remote access (port 22 blocked).

The difference was night and day. Instead of debugging cluster coordination, I could focus on making my services work.
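As a rough sketch of the “UFW & Tailscale” item, the tasks below allow SSH only on the Tailscale interface and then switch the firewall on, written in the Ansible style used later in this post. They assume the community.general collection is installed, that Tailscale is already connected, and that its interface carries the default Linux name tailscale0. Treat it as an illustration, not a copy of the real playbook.

```yaml
# Sketch only: the interface name and the collection are assumptions.
- name: Allow SSH only over the Tailscale interface
  community.general.ufw:
    rule: allow
    direction: in
    interface: tailscale0
    to_port: '22'
    proto: tcp

# With a default "deny incoming" policy, port 22 stays closed on the public interface.
- name: Enable UFW
  community.general.ufw:
    state: enabled
```

Apply something like this only once you have confirmed you can reach the server over Tailscale, otherwise you can lock yourself out.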

Docker networking with UFW still has its quirks, but the community has provided excellent solutions like ufw-docker, which I use to manage firewall rules for my containers. You set up Traefik, open ports 80 and 443, configure Let’s Encrypt, and deploy your services with Docker Compose.
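To make “deploy your services with Docker Compose” concrete, here is a minimal sketch of a service published through Traefik. It assumes the service is defined in the same Compose file as Traefik (so both share the project’s default network), that Traefik has an entrypoint named websecure and a certificate resolver named letsencrypt, and it uses the traefik/whoami test image and a placeholder hostname.

```yaml
services:
  whoami:
    image: traefik/whoami          # tiny test server that listens on port 80
    restart: unless-stopped
    labels:
      # Needed when Traefik runs with exposedbydefault=false
      - 'traefik.enable=true'
      # Placeholder hostname: point its DNS record at the server first
      - 'traefik.http.routers.whoami.rule=Host(`whoami.yourdomain.com`)'
      - 'traefik.http.routers.whoami.entrypoints=websecure'
      - 'traefik.http.routers.whoami.tls.certresolver=letsencrypt'
```

No ports are published for the service itself; only Traefik listens on 80 and 443, which keeps the firewall rules short.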

For added security, you can use CrowdSec for intrusion prevention or proxy traffic through Cloudflare and create UFW rules to only allow Cloudflare IPs.
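The Cloudflare-only idea could look roughly like the tasks below. This is a sketch under a few assumptions: the community.general collection is installed, ufw-docker is already set up (hence route rules), and Cloudflare’s published IPv4 list is fetched from https://www.cloudflare.com/ips-v4 at run time. The registered variable name is made up for this example.

```yaml
- name: Fetch Cloudflare's published IPv4 ranges
  ansible.builtin.uri:
    url: https://www.cloudflare.com/ips-v4
    return_content: true
  register: cloudflare_ipv4   # hypothetical variable name

- name: Allow HTTPS to containers only from Cloudflare ranges
  community.general.ufw:
    rule: allow
    route: true               # forwarded traffic, i.e. traffic destined for containers
    proto: tcp
    from_ip: '{{ item }}'
    to_port: '443'
  loop: '{{ cloudflare_ipv4.content.splitlines() }}'
```

This only has an effect if there is no broader rule that allows port 443 from any source, and the IPv6 ranges would need the same treatment.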

Now, the challenge shifted to automating the initial setup. Manually hardening Ubuntu, installing Docker, and configuring firewalls is tedious and error-prone.

Enter Infrastructure as Code

This is where I discovered Ansible. Instead of documenting setup steps in Markdown files that quickly go stale, I could codify my entire server configuration.

    # A snippet from my Ansible playbook for basic server hardening
    - name: Update apt cache and upgrade packages
      apt:
        update_cache: yes
        upgrade: full

    - name: Install essential packages
      apt:
        name: ['curl', 'git', 'htop', 'ufw', 'fail2ban']
        state: present

    - name: Configure UFW default policies
      ufw:
        direction: '{{ item.direction }}'
        policy: '{{ item.policy }}'
      loop:
        - { direction: 'incoming', policy: 'deny' }
        - { direction: 'outgoing', policy: 'allow' }

The beauty of Ansible is its idempotency: I can run the same playbook multiple times without breaking anything. It’s perfect for those “did I remember to install Docker?” moments.
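To show what idempotency looks like in practice, here is a small sketch that prepares the file Traefik uses to store certificates. The /opt/traefik path is an assumption for this example. On the first run both tasks should report “changed”; on later runs they should report “ok”, because the directory and file already exist with the requested permissions.

```yaml
- name: Ensure the certificate store directory exists
  ansible.builtin.file:
    path: /opt/traefik/letsencrypt   # hypothetical location
    state: directory
    mode: '0700'

- name: Ensure acme.json exists with restrictive permissions
  ansible.builtin.file:
    path: /opt/traefik/letsencrypt/acme.json
    state: touch
    mode: '0600'
    # Preserving timestamps keeps repeated runs from reporting a change
    access_time: preserve
    modification_time: preserve
```

Running ansible-playbook with --check --diff previews what would change without touching the server, which is a cheap way to confirm that a playbook really is idempotent.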

Practical Example: Solving the Docker + UFW Puzzle

Docker’s tendency to bypass UFW rules is a well-known issue that can unintentionally expose your services. Here’s how I solved it using the ufw-docker script:

    # 1. Install the ufw-docker script
    sudo wget -O /usr/local/bin/ufw-docker \
      https://github.com/chaifeng/ufw-docker/raw/master/ufw-docker
    sudo chmod +x /usr/local/bin/ufw-docker

    # 2. Let the script manage Docker's firewall rules, then restart UFW to load them
    sudo ufw-docker install
    sudo systemctl restart ufw

    # 3. Now, you can manage container access with standard UFW rules
    sudo ufw route allow proto tcp from any to any port 80
    sudo ufw route allow proto tcp from any to any port 443

Traefik Configuration Example

Here’s my go-to reverse proxy setup with automatic SSL:

    # docker-compose.yml for Traefik
    version: '3.8'

    services:
      traefik:
        image: traefik:v3.1
        container_name: traefik
        restart: unless-stopped
        command:
          - '--api.dashboard=true'
          - '--providers.docker=true'
          - '--providers.docker.exposedbydefault=false'
          - '--entrypoints.web.address=:80'
          - '--entrypoints.websecure.address=:443'
          - '--certificatesresolvers.letsencrypt.acme.httpchallenge=true'
          - '--certificatesresolvers.letsencrypt.acme.httpchallenge.entrypoint=web'
          - '--certificatesresolvers.letsencrypt.acme.email=your-email@example.com'
          - '--certificatesresolvers.letsencrypt.acme.storage=/letsencrypt/acme.json'
        ports:
          - '80:80'
          - '443:443'
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock:ro
          - ./letsencrypt:/letsencrypt
        labels:
          - 'traefik.enable=true'
          - 'traefik.http.routers.dashboard.rule=Host(`traefik.yourdomain.com`)'
          - 'traefik.http.routers.dashboard.entrypoints=websecure'
          - 'traefik.http.routers.dashboard.tls.certresolver=letsencrypt'
          - 'traefik.http.routers.dashboard.service=api@internal' # Point the router at Traefik's built-in dashboard service
          - 'traefik.http.middlewares.dashboard_auth.basicauth.users=user:$$apr1$$...$$' # Add Basic Auth for security
          - 'traefik.http.routers.dashboard.middlewares=dashboard_auth'

My Ansible Project Structure

My current Ansible setup is organized into two main parts: one for my remote server and one for my home server. The remote server configuration, which handles public-facing services, follows this structure:

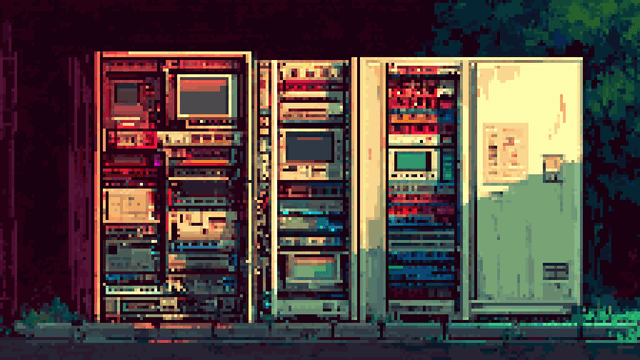

The Ansible project structure

Here’s a simplified example from my docker_installation role:

    # tasks/main.yml in roles/docker_installation
    ---
    - name: Install Docker dependencies
      become: true
      ansible.builtin.apt:
        name:
          - apt-transport-https
          - ca-certificates
          - curl
          - gnupg
          - lsb-release
        state: present
        update_cache: true

    - name: Add Docker GPG key
      become: true
      ansible.builtin.get_url:
        url: https://download.docker.com/linux/ubuntu/gpg
        dest: /etc/apt/trusted.gpg.d/docker.asc
        mode: '0644'
        force: true

    - name: Add Docker repository
      become: true
      ansible.builtin.apt_repository:
        repo: 'deb [arch=amd64 signed-by=/etc/apt/trusted.gpg.d/docker.asc] https://download.docker.com/linux/ubuntu {{ ansible_distribution_release }} stable'
        state: present

    - name: Install Docker Engine
      become: true
      ansible.builtin.apt:
        name:
          - docker-ce
          - docker-ce-cli
          - containerd.io
          - docker-buildx-plugin
          - docker-compose-plugin
        state: present

    - name: Ensure Docker service is started and enabled
      become: true
      ansible.builtin.systemd:
        name: docker
        state: started
        enabled: true
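A role does nothing until a playbook applies it to a host. The sketch below shows one way to wire the docker_installation role in; the file name site.yml and the inventory group remote are placeholders, not necessarily what the repo uses.

```yaml
# site.yml (hypothetical file name)
---
- name: Provision the remote server
  hosts: remote                 # hypothetical inventory group
  become: true
  roles:
    - docker_installation
  post_tasks:
    - name: Verify that the Docker CLI responds
      ansible.builtin.command: docker --version
      register: docker_version
      changed_when: false       # a read-only check should never report a change

    - name: Show the installed Docker version
      ansible.builtin.debug:
        msg: '{{ docker_version.stdout }}'
```

With an inventory file in place, this would run as ansible-playbook -i inventory.ini site.yml, where inventory.ini is again a placeholder name.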

My Current Production Setup

My infrastructure currently consists of a bare-metal server from OVHcloud and an old desktop running Ubuntu Server at home.

The remote server handles public services:

- Traefik: Reverse proxy and automatic SSL.

- CrowdSec: Intrusion prevention.

- Netdata: System monitoring.

- Tailscale: Secure internal networking.

- Umami: Web analytics.

- A few other services I’ll cover in future posts.

The home server runs internal services:

- Cloudflared: Exposes select services to the internet securely.

- TrueNAS: Storage and backups.

- AdGuard Home: Network-wide ad blocking.

- Vaultwarden: Password and secret management.

- Home Assistant: Home automation.

- Immich: Photo management.

- Frigate: Home security NVR.

- Plex: Media streaming.

- n8n: Business and workflow automation.

All of this is managed with Ansible playbooks that can:

- Provision a new server from scratch in under 10 minutes.

- Apply security hardening automatically.

- Deploy applications with consistent configurations.

- Handle secrets securely.
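For the secrets part, one common approach is Ansible Vault. The sketch below assumes a vaulted variable file, an existing /opt/umami directory, and a variable named vault_postgres_password; all of these names are illustrative and may differ from what the repo actually does.

```yaml
# group_vars/all/vault.yml (hypothetical path), encrypted with:
#   ansible-vault encrypt group_vars/all/vault.yml
# It would define, for example:
#   vault_postgres_password: <your secret>

# A task that consumes the vaulted variable
- name: Write the database password to an env file readable only by root
  ansible.builtin.copy:
    dest: /opt/umami/.env       # hypothetical location
    content: |
      POSTGRES_PASSWORD={{ vault_postgres_password }}
    owner: root
    group: root
    mode: '0600'
  no_log: true                  # keep the secret out of Ansible's task output
```

The playbook is then run with --ask-vault-pass (or a vault password file), so the encrypted file can live in Git while the plaintext never does.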

What’s Next

This is just the beginning of my self-hosting journey. In upcoming posts, I’ll dive deeper into:

- ✅ How I set up my remote server in under 10 minutes.

- Why I chose Cloudflare Tunnels over Dynamic DNS.

- The benefits of self-hosting your web analytics instead of using Google Analytics.

- Setting up a local DNS server with custom certificates for .home domains.

- Configuring TrueNAS and Docker contexts for seamless deployments.

- Building the complete “arr-stack”: Sonarr, Radarr, Prowlarr, qBittorrent, and Overseerr.

The key lesson? Start simple, automate early, and scale only when you absolutely need it.

Infrastructure as Code isn’t just for large enterprises. For personal projects, the ability to recreate your entire setup with a single command is a superpower.

What’s your experience with self-hosting? Are you facing similar challenges? Let me know on Twitter—I’d love to hear your stories and solutions.